Many audio classification datasets are available, for example the Speech Commands dataset and the Common Voice dataset. Besides these, there are North American voice datasets, African sound datasets, Asian audio datasets, and European voice datasets.

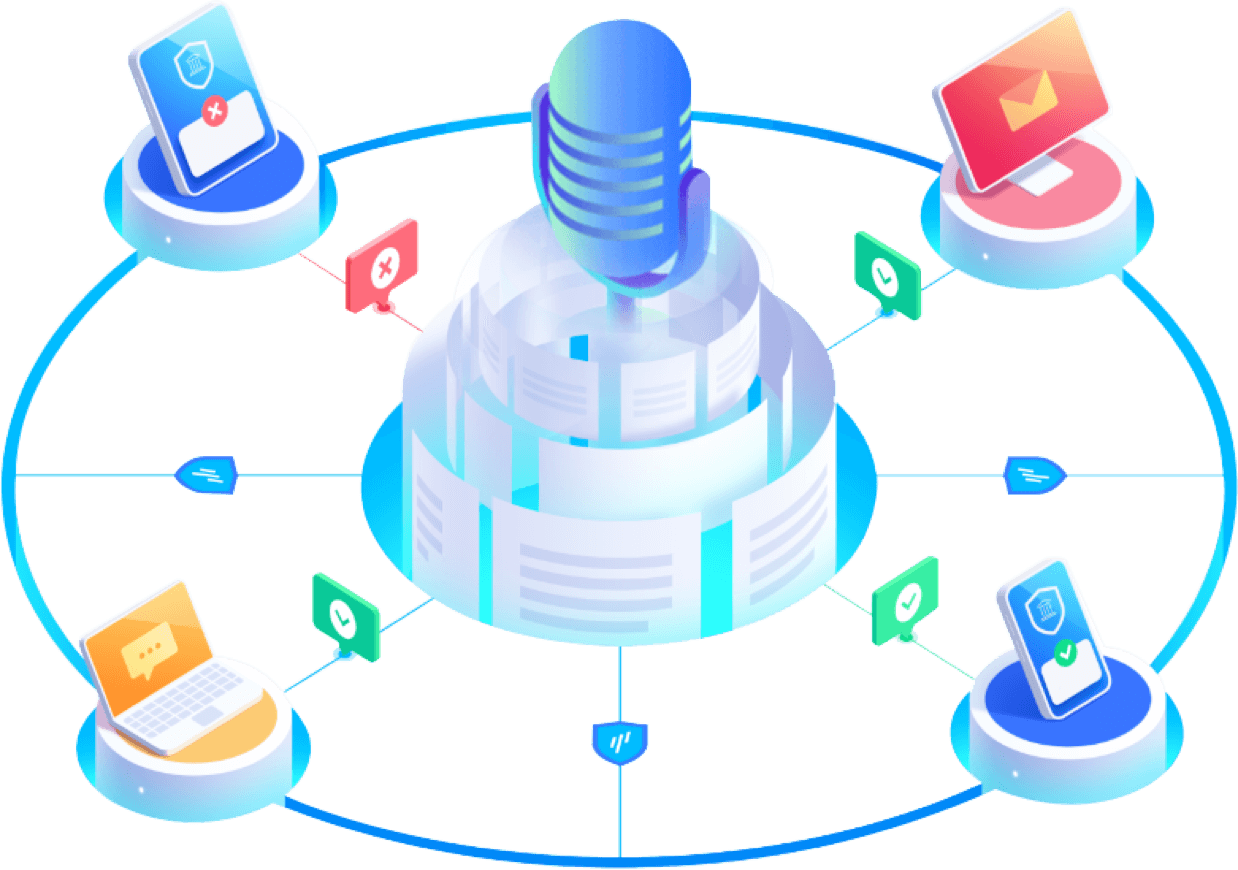

Labels in the figure above: Environments; Devices: Computer (Desktop/Laptop), Pro (Hi-Fi recorder/Mic Array); Speakers; Language: Chinese/English/French/German…